FMEA Part 2 - How to create meaningful scores in an FMEA to better understand your project risks

Introduction

Risk assessment is a key component of the FMEA process. It helps you determine how much effort to invest in a particular component and how to prioritize improvements. In this blog post, I'll describe how risk assessment works, how to create meaningful risk scores, and what they mean for your organization.

If you missed FMEA Part 1, which summarizes the entire process, check it out here.

For this session, you will need our FMEA template. Click here for a free download.

At this point in the process, you have:

Identified your core processes (click here for our article on creating a simple SIPOC)

Brainstormed potential failures in those processes with your team. For processes, think through each element in your SIPOC and focus on what could fail and any key dependencies there might be. For projects, take a look at your project plan and resourcing. Think about deadlines, resource constraints, and upstream/downstream process dependencies. Capture those items in the FMEA template provided.

You are now ready to begin scoring!

Why is FMEA scoring useful?

FMEA allows you to assess risk in a structured way to identify opportunities for process improvements.

A formal FMEA process can be used to assess risks and thereby improve the quality of products and services.

It uses a simple but comprehensive framework that systematically identifies possible causes of failure, then determines their effects on the customer, before finally assigning a risk score for each potential failure mode.

In the following sections, we will walk through Severity, Detectability, and Occurrence scoring in more detail.

Severity is a subjective measure of the effect of a failure.

The severity of a risk can sometimes be quantified (for example, as a fine, a penalty, or lost revenue), but the rating itself always sits on a subjective scale that depends on context. For example, if you’re manufacturing an automotive part and a failure occurs in production, it may not be as severe as the same failure in a part used to make an airplane engine.

Most organizations use some type of severity rating scale when evaluating the potential risk associated with failures. A commonly used numbering scheme is a 1-10 scale grouped into bands: 1-3 for low severity events, 4-6 for medium severity events, and 7-10 for high severity events.
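As a minimal sketch, the 1-3 / 4-6 / 7-10 banding described above can be written as a small lookup. The function name `severity_band` is hypothetical and not part of the template; adjust the cut-offs if your organization bands its scores differently.

```python
def severity_band(score: int) -> str:
    """Map a 1-10 severity score to the low/medium/high bands described above."""
    if not 1 <= score <= 10:
        raise ValueError(f"severity must be between 1 and 10, got {score}")
    if score <= 3:
        return "low"
    if score <= 6:
        return "medium"
    return "high"


for score in (2, 5, 9):
    print(score, severity_band(score))
```

Rejecting out-of-range scores up front keeps typos in the worksheet from silently landing in a band.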

Detectability is a subjective measure of how easy it is to detect a failure.

Detectability is the likelihood that someone will notice the failure, either by seeing it directly or by being told about it. For example, suppose you have a product that doesn't work properly when used with another product. This may be considered hard to detect because no one would know about the problem unless they happened to use both products together. On the other hand, say you have an engine part on a car that fails after 50 miles and causes your vehicle to stop running. In this case, the failure may be detectable because there could be warning lights on your dashboard or some sort of chime from inside the car before it shuts down completely.

Detectability is subjective because different people in different situations may have different thresholds for what qualifies as “easy” versus “hard” detection. This makes it difficult for teams using FMEAs to make consistent determinations without detailed discussions about each item. More to come on this…

Occurrence is a subjective measure of how often a failure will happen.

Your team must determine the occurrence rating for each failure mode in the FMEA. The scale should be consistent across all of your FMEAs, but there is no universal standard for what these ratings mean; it's up to you as an organization to decide on your own definitions. For example, does a 1 on this rating scale mean that it happens once every 10 years? That it happens once every 5 minutes? Or that it happens at some point during testing?

The only way to make sure you're using your chosen scale consistently is to ensure that everyone who works on FMEAs has access to the same list of definitions and descriptions. Once you set up this rating scale, you will get a lot of utility from it in future sessions.
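One way to keep everyone on the same definitions is to store the scale in a single shared place. The sketch below is only an illustration: the anchor descriptions and the names `OCCURRENCE_ANCHORS` and `describe_occurrence` are hypothetical placeholders, not an industry standard and not the wording in the template.

```python
# Hypothetical anchor points for an occurrence scale.
# Replace the descriptions with your organization's own definitions.
OCCURRENCE_ANCHORS = {
    1: "about once every few years",
    4: "about once a quarter",
    7: "about once a week",
    10: "almost every time the process runs",
}


def describe_occurrence(score: int) -> str:
    """Return the score together with the nearest anchor at or below it."""
    if not 1 <= score <= 10:
        raise ValueError(f"occurrence must be between 1 and 10, got {score}")
    anchor = max(a for a in OCCURRENCE_ANCHORS if a <= score)
    return f"{score} (anchor {anchor}: {OCCURRENCE_ANCHORS[anchor]})"


print(describe_occurrence(5))
```

Scores that fall between anchors are reported against the closest lower anchor, which gives the team a shared reference point when debating a rating.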

Risk priority number (RPN) combines severity, occurrence and detectability in one number.

The Risk Priority Number (RPN) is a measure of the risk of failure. It is calculated by multiplying severity, occurrence and detectability:

Severity (S): The severity of a potential problem is determined by the impact that a failure would have on your business. Keep in mind that a low severity score combined with a high occurrence score might actually result in more risk for your business than a high severity score with very little likelihood of occurrence. It seems basic, but teams often focus on the “end-of-world” scenarios that will most likely never happen and miss the everyday problems that impact the customer.

Occurrence (O): The occurrence represents how often the failure happens during use or in production environments over time. The question to ask: how often does it happen?

Detectability (D): The detectability represents how easy it will be for you to detect problems before they become severe enough to cause downtime or loss-of-life situations. The question to ask: can we see this problem coming before it becomes extremely expensive?
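Written as a formula, RPN = S × O × D. The sketch below shows the arithmetic and the point made above about everyday problems versus "end-of-world" scenarios. The class name, failure mode names, and scores are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class FailureMode:
    name: str
    severity: int       # S, 1-10
    occurrence: int     # O, 1-10
    detectability: int  # D, 1-10 (higher means harder to detect)

    def __post_init__(self):
        for label in ("severity", "occurrence", "detectability"):
            value = getattr(self, label)
            if not 1 <= value <= 10:
                raise ValueError(f"{label} must be between 1 and 10, got {value}")

    @property
    def rpn(self) -> int:
        """Risk Priority Number: severity x occurrence x detectability."""
        return self.severity * self.occurrence * self.detectability


# Hypothetical examples: a frequent, low-severity issue vs. a rare, severe one.
everyday = FailureMode("Late invoice posting", severity=3, occurrence=9, detectability=5)
doomsday = FailureMode("Data center loss", severity=10, occurrence=1, detectability=5)

print(everyday.name, everyday.rpn)  # 3 * 9 * 5 = 135
print(doomsday.name, doomsday.rpn)  # 10 * 1 * 5 = 50
```

With these example scores, the everyday problem carries the higher RPN even though its severity is much lower.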

Severity, detectability, and occurrence ratings need to be calibrated for your organization

As discussed in the sections above, calibration of the severity, detectability, and occurrence ratings is crucial to the process.

First, you want your risk scores to provide useful information about whether a process or product is likely to have a safety or quality failure. If your team uses an inappropriate severity rating scale, then some risks may be overrated while others may be understated.

Second, when calibrating scores with management and/or customers, it will be easier for them to understand the results if you use the same rating scales they already use for other projects within their organizations.

Third, it makes sense that if two organizations have different scales for their severity ratings then there will be differences in their results. In this scenario, you should just seek to ensure the scales are directionally in sync. If they aren’t, it is an indication that the organization might not be properly aligned regarding priorities or perception of risk.

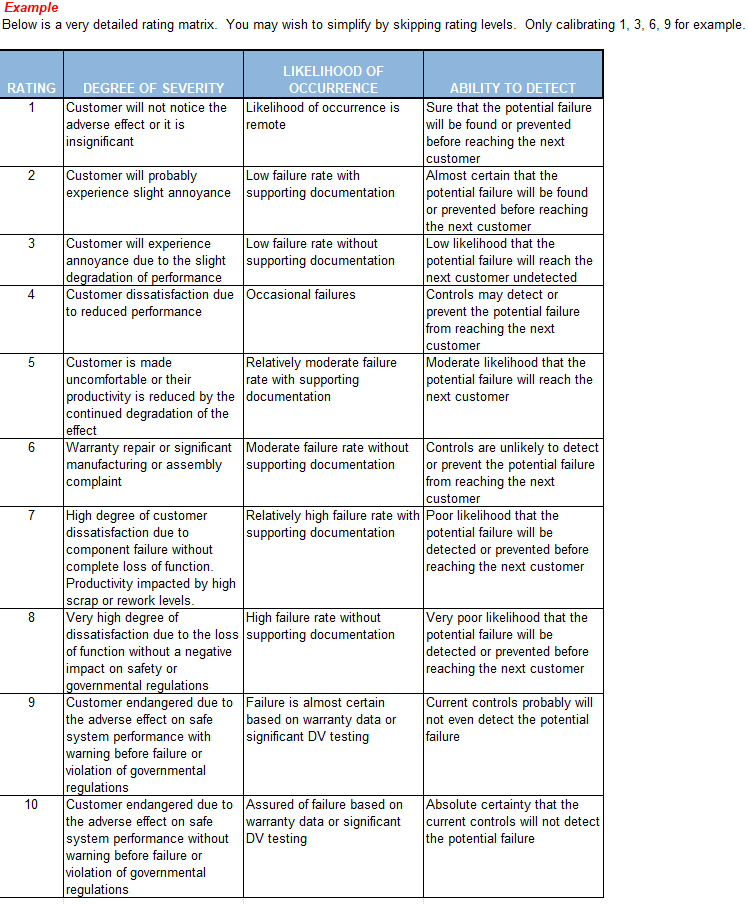

Below is an example from our FMEA template showing risk ratings after calibration. Obviously, each rating and description needs to be updated for your business. Having your team aligned upfront on these scales is absolutely critical for your FMEA exercise to be successful. It is worth a bit of time upfront to set this up. You can then leverage it for other FMEAs in the future.

Now that the background stuff is behind us, let’s do this! Follow the steps below once your calibration is completed.

Step 1

Fill out the severity scores with your team

In order to create meaningful risk scores, your team should fill out the severity scores together. The severity of a potential failure can be rated on a scale from 1 to 10. The higher the number, the more severe it is. Be honest and practical in your scoring. Unrealistic scoring will be evident and will make your FMEA less credible.

In the template, fill out the "Potential Causes". This isn't meant to be a scientific, data-driven exercise. It is simply the team’s initial thoughts on why the failure might happen. Bring in a Six Sigma Black Belt if you want to mathematically prove which variables are driving your failures. However, this is overkill for most finance processes. Subject matter experts will likely provide tremendous insight very quickly here. When you brainstorm recommended actions later in the template, inputs here will be extremely valuable.

Pro tip: Sometimes risk scoring can create a homogeneous blob of high risk results with no clear items to focus on. To create greater differentiation between risk scores, some FMEA authors choose to force scoring into a few widely spaced buckets, for example allowing only scores of 1, 3, or 9. It will make the team think twice before assigning a 9 to anything because it will make that item pop. That said, if the team agrees that is the right score, that risk likely deserves a lot of attention. Be prepared to justify your scoring with senior leaders!
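To see why the 1, 3, 9 approach spreads results out, here is a small sketch that enforces the three allowed scores and lists every RPN the scheme can produce. The names `ALLOWED_SCORES` and `bucketed_rpn` are hypothetical.

```python
from itertools import product

ALLOWED_SCORES = (1, 3, 9)


def bucketed_rpn(severity: int, occurrence: int, detectability: int) -> int:
    """Compute an RPN, rejecting any score outside the 1/3/9 buckets."""
    scores = {
        "severity": severity,
        "occurrence": occurrence,
        "detectability": detectability,
    }
    for label, value in scores.items():
        if value not in ALLOWED_SCORES:
            raise ValueError(f"{label} must be one of {ALLOWED_SCORES}, got {value}")
    return severity * occurrence * detectability


# Every distinct RPN that three 1/3/9 scores can produce.
possible = sorted({s * o * d for s, o, d in product(ALLOWED_SCORES, repeat=3)})
print(possible)  # [1, 3, 9, 27, 81, 243, 729]
```

Each step up in any one score triples the RPN, so a single 9 visibly separates an item from its neighbors.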

Step 2

Fill out the occurrence scores with your team

For each potential failure mode in the FMEA, you'll need to assign an occurrence score from 1 to 10. This rating indicates how likely the failure is to occur. You can base these scores on historical data from your business or on estimates from your team. Because these scores may be subjective, they should reflect the opinions of all stakeholders, so be sure to include people other than yourself when assigning them.

The template asks you to identify current controls (if any). For projects, this might be formal milestone reviews. For financial processes, these might be formal SOX controls or similar.

Step 3

Fill out the detectability scores with your team

Once again, fill out the detectability score from 1 to 10 for each Potential Failure Mode as a team. It helps to understand why detectability scores matter so much in the FMEA. You want the first discovery of a problem or failure to happen in the FMEA, not through customer complaints, an audit, or problems with production. If a failure can only be detected by the customer (internal or external), that is the worst-case scenario and should receive a high (meaning poor) detectability score. Ultimately, be brutally honest here. Don’t just assume someone will “catch” the problem. If there isn’t a measurement or review process in place, it won’t get caught proactively. During the risk mitigation step (future article), quick wins often come out of detectability. Implementing controls and review processes is generally an easy way to reduce RPN scores.
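As a rough illustration of why detectability is a source of quick wins, the sketch below re-scores a single failure mode after a review control is added. The scores are invented for the example; only the detectability score changes.

```python
def rpn(severity: int, occurrence: int, detectability: int) -> int:
    """Risk Priority Number: severity x occurrence x detectability."""
    return severity * occurrence * detectability


# Hypothetical failure mode with no review in place:
# only the customer would notice, so detectability is poor (9).
before = rpn(severity=7, occurrence=4, detectability=9)

# Same failure mode after adding a review step:
# severity and occurrence are unchanged, detectability improves to 3.
after = rpn(severity=7, occurrence=4, detectability=3)

reduction = 1 - after / before
print(before, after, f"{reduction:.0%}")  # 252 84 67%
```

Because RPN is a product, improving one factor by a given ratio improves the whole score by the same ratio, without touching the underlying process.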

Step 4

Assess the RPN scores. Do they make sense to your team?

After completing the analysis, it’s important to assess the RPN scores. You want to make sure that they are in line with your risk tolerance and that you can trust them. If not, revisit the underlying severity, occurrence, and detectability scores with your team and adjust them. It is critical to put yourself in the shoes of the senior managers who will review the FMEA. Are the scores credible?

High scores require immediate attention and mitigation because they represent an imminent risk of failure.

Low scores should be sanity checked to ensure there is no underlying issue (e.g., a known process problem).
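The review described above can be sketched as a simple triage pass over the scored rows. Everything specific here is an assumption for illustration: the failure modes, the threshold of 100 for a "high" RPN, and the rule that a low RPN with a severity of 9 or more deserves a second look. Set these to match your own risk tolerance.

```python
# Hypothetical rows: (failure mode, severity, occurrence, detectability)
rows = [
    ("Wrong exchange rate", 6, 5, 2),
    ("Missed approval", 8, 3, 7),
    ("Unreconciled account", 9, 1, 2),
]

HIGH_RPN = 100        # assumed threshold, not a standard
SEVERE_THRESHOLD = 9  # assumed cut-off for flagging low-RPN items


def triage(rows, high_rpn=HIGH_RPN, severe=SEVERE_THRESHOLD):
    """Rank failure modes by RPN and attach a suggested next step."""
    scored = sorted(
        ((name, s * o * d, s) for name, s, o, d in rows),
        key=lambda item: item[1],
        reverse=True,
    )
    result = []
    for name, rpn, severity in scored:
        if rpn >= high_rpn:
            status = "mitigate now"
        elif severity >= severe:
            status = "sanity check"  # low RPN, but the effect would be severe
        else:
            status = "monitor"
        result.append((name, rpn, status))
    return result


for name, rpn, status in triage(rows):
    print(f"{rpn:>4}  {status:<13} {name}")
```

Sorting by RPN gives the team a starting order for discussion; the status labels are prompts for the conversation, not a substitute for it.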

Below is an example of the FMEA with example scores filled out. Make sure to download the Excel template.

Conclusion

RPNs are a great way to measure the risk of failures in your product or service. RPNs allow you to assess risk in a structured way and identify opportunities for process improvements. The challenge is to develop scores that make sense for your organization’s use case and values. To do this, we recommend using an FMEA template as a starting point and calibrating severity, occurrence and detectability ratings based on your own experience with each type of failure.

Apollonian Consulting is here to guide your organization through this FMEA journey to mitigate risk. Click HERE to connect with us!

In our next article, we will cover how to create an action plan to mitigate risks and how to perform a preliminary re-score of your RPNs. Stay tuned!